Design Portfolio

Leading UX design for one enterprise platform and two cross-cutting microservices serving multiple domains: EPA emissions data for researchers, energy sector intelligence for AEs, and custom calculations for analysts. Design strategy spans user research, information architecture, data visualization, and interaction design for complex industrial data.

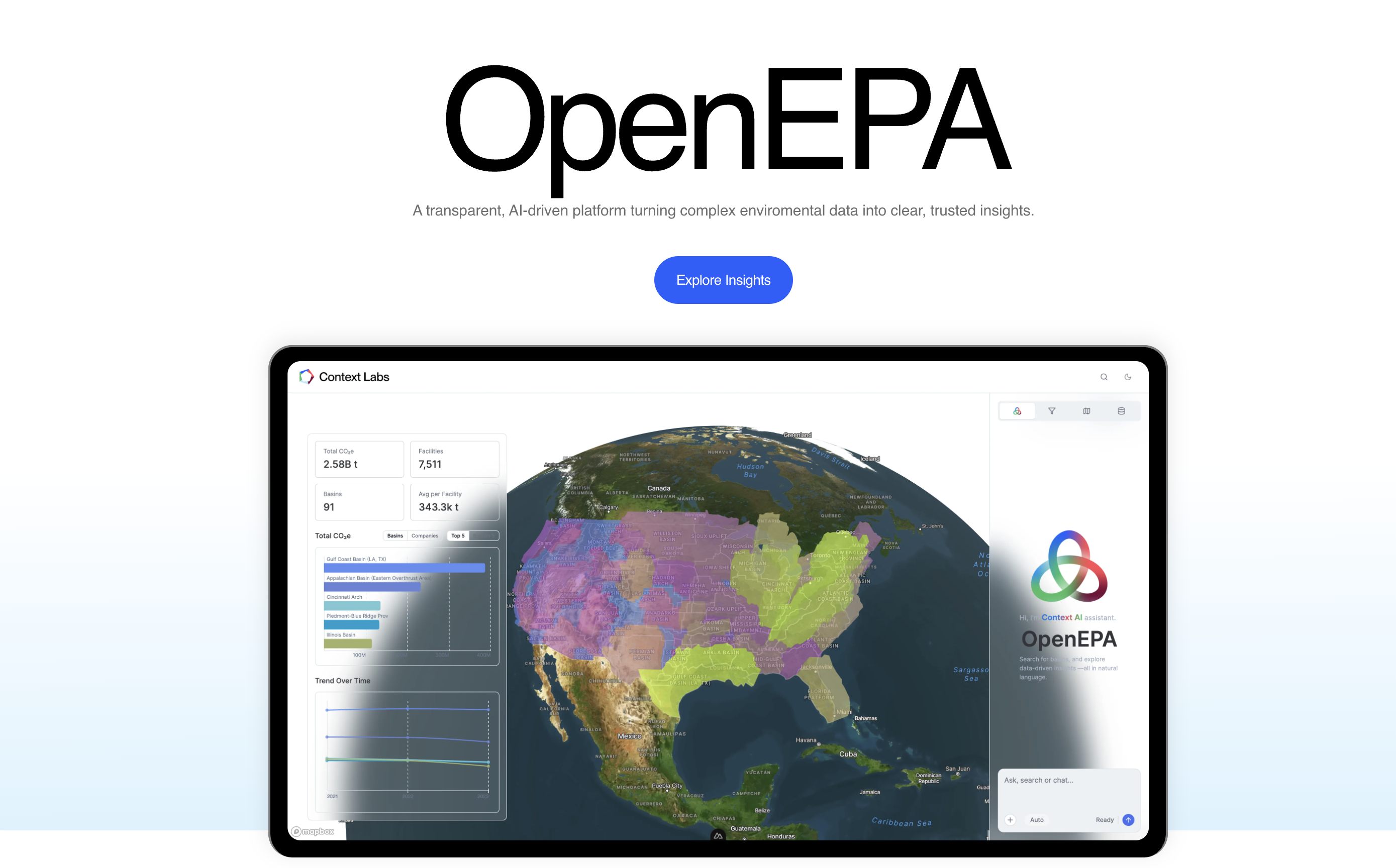

OpenEPA Platform

PilotLed UX research and information architecture for EPA emissions platform serving researchers and journalists. Designed data visualization for 15 years of history, citation/provenance UX patterns, and AI Q&A interfaces balancing natural language with data precision.

2,800+ facilities • 15 years data • 99.9% uptime

Context AI Microservice

LiveDirected UX design for AI-powered intelligence tool. Led user research with energy sector AEs, designed conversational UI patterns, and built production-ready design system using Vue 3 + TypeScript achieving 95% efficiency gain through user-centered workflow redesign.

2+ hours to 5 min prep • $85M pipeline • 200+ hrs/mo saved

Calculation Editor Microservice

In DevelopmentLed interaction design for calculation transparency microservice. Pioneered UX patterns for industrial formula documentation, methodology disclosure, and audit trails meeting academic reproducibility requirements—establishing new design standards for trustworthy data analytics.

Academic-grade reproducibility • Cross-platform